Product design metrics

CAIR Metric (Confidence in AI Results)

The real question isn’t whether your AI is good enough — it’s whether your users are confident enough to use it.

Source: LangChain Blog – The Hidden Metric That Determines AI Product Success

Most AI products don’t fail because the model is inaccurate — they fail because users don’t trust the output, or because fixing errors feels too costly or too risky.

The CAIR metric reframes how we measure readiness:

- Value: What users gain when the AI works well — such as saved time, faster workflows, new insights, or creative boosts. High value raises the incentive to use the product.

- Risk: The consequences of failure — ranging from minor inconvenience to serious financial, legal, or reputational harm. Higher risk makes adoption less likely.

- Correction: The effort required to detect and fix errors — whether it takes seconds (undo) or hours (specialist review). The more correction effort required, the less likely adoption will scale.
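The three factors can be combined into a single ratio. The form below assumes the definition used in the cited LangChain post; the 1-5 scoring scale in the worked lines is an illustrative assumption, not something prescribed above.

```latex
\[
\text{CAIR} = \frac{\text{Value}}{\text{Risk} \times \text{Correction}}
\]

% Hypothetical scores on a 1-5 scale:
% easy-to-undo design:     Value = 4, Risk = 1, Correction = 1  =>  CAIR = 4 / (1 * 1) = 4
% hard-to-correct design:  Value = 4, Risk = 4, Correction = 4  =>  CAIR = 4 / (4 * 4) = 0.25
```

With Value held constant, the score drops quickly as Risk and Correction grow, which is why design choices that lower those two factors matter as much as model accuracy.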

👉 Implication: An 85%-accurate AI in a high-CAIR design can outperform a 95%-accurate AI in a low-CAIR design on adoption, because users will actually use the former with confidence.

Practical Implications for AI Products

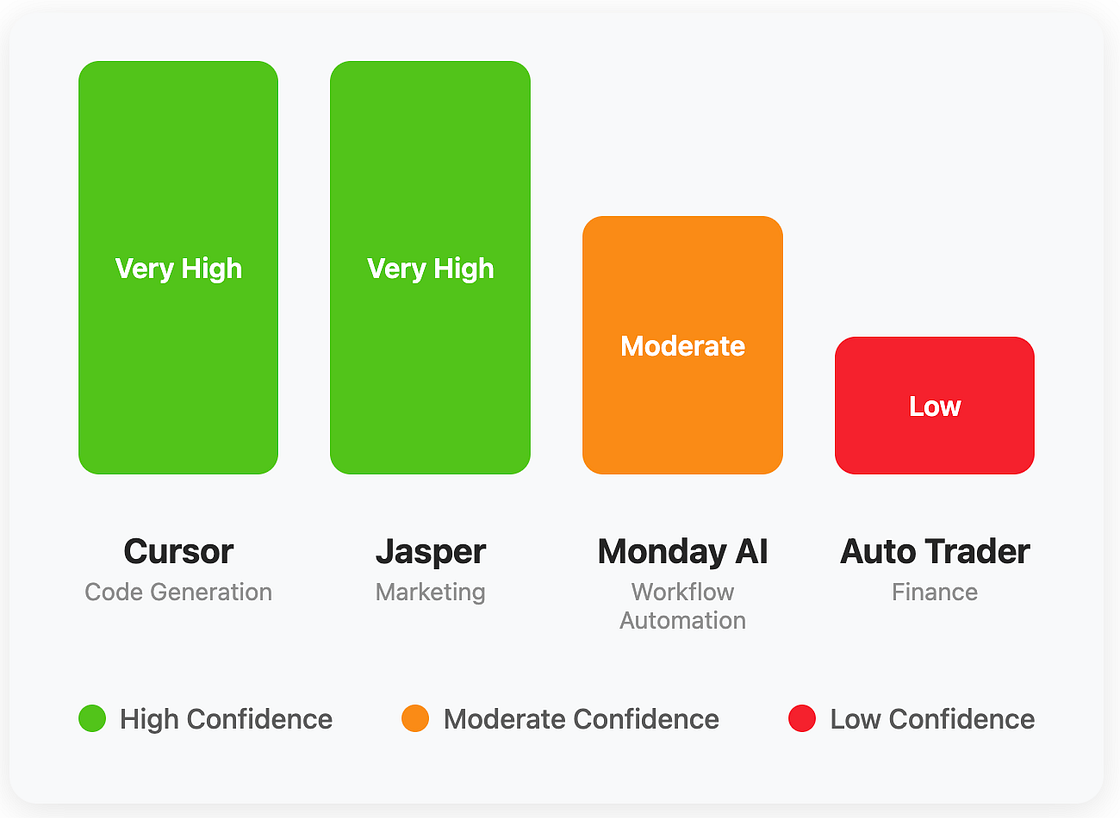

CAIR highlights why some products thrive while others stall, even if the underlying models are similar:

- High-CAIR products thrive because they balance usefulness with low correction effort and manageable risk.

  - Example: Coding assistants like Cursor or GitHub Copilot. Even if suggestions are sometimes wrong, developers can instantly see, edit, or roll back changes. The risks are limited (compile errors, minor bugs), correction is cheap (simple edits), and the value is high (time saved, creative input). This balance explains their rapid adoption.

- Low-CAIR products struggle because hidden or costly errors outweigh the apparent value.

  - Example: AI-generated legal contracts. Even small inaccuracies can introduce significant legal risks. Detecting mistakes requires expert review, which often cancels out the value of automation. As a result, adoption remains low despite advances in accuracy.

- Medium-CAIR products need careful framing. Products in domains like healthcare, compliance, or finance can succeed only if they are paired with guardrails, human oversight, and transparency. Otherwise, perceived risk will hold back adoption.
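The comparison above can be sketched numerically. This is a minimal illustration, assuming the ratio form CAIR = Value / (Risk × Correction) from the cited post; the 1-5 scores, the `ProductDesign` class, and the band cut-offs in `cair_band` are all hypothetical choices made for the example, not measured values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProductDesign:
    """Hypothetical 1-5 scores for one AI product design."""
    name: str
    value: int       # benefit when the AI works well
    risk: int        # severity of consequences when it fails
    correction: int  # effort needed to detect and fix an error

    def cair(self) -> float:
        # Assumed ratio form: Value / (Risk * Correction)
        return self.value / (self.risk * self.correction)


def cair_band(score: float, low: float = 0.5, high: float = 2.0) -> str:
    """Bucket a CAIR score; the cut-offs are illustrative assumptions."""
    if score >= high:
        return "high"
    if score <= low:
        return "low"
    return "medium"


# Illustrative scores only; a real team would calibrate these with users.
designs = [
    ProductDesign("coding assistant", value=4, risk=1, correction=1),
    ProductDesign("compliance review with human sign-off", value=4, risk=3, correction=1),
    ProductDesign("unreviewed legal contract drafting", value=4, risk=5, correction=4),
]

for d in designs:
    print(f"{d.name}: CAIR={d.cair():.2f} ({cair_band(d.cair())})")
```

All three designs share the same Value score, so the spread in CAIR comes entirely from Risk and Correction, which are the factors product design can influence most directly.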

👉 Reframing development: Instead of only asking “Is the AI accurate enough?”, product leaders must ask “Is the CAIR high enough for adoption?”


The Five Principles of CAIR Optimization

Analysis of successful AI products points to five design principles that consistently raise CAIR. These principles are not theoretical — they are validated across industries and use cases:

- Strategic human-in-the-loop (optimises all three factors): Oversight must be added with precision. Requiring approval for every suggestion kills productivity (lowers Value), but placing checkpoints before irreversible actions keeps Risk low while preserving utility. The art lies in deciding where human involvement maximises CAIR with minimal Value trade-off.

- Reversibility (reduces Correction effort): Clear undo options, rollback mechanisms, and version history dramatically reduce the cost of correcting mistakes. Beyond practicality, reversibility provides psychological safety. When users know they can always reverse a step, their willingness to explore and adopt AI increases.

- Consequence isolation (reduces Risk): Safe experimentation environments such as sandboxes, preview modes, and drafts separate testing from real deployment. This allows users to “try before they trust,” cutting down fear of harmful consequences. Sandboxed designs have been shown to multiply adoption rates compared to live-only modes.

- Transparency (reduces Risk and Correction effort): Showing sources, reasoning, or confidence scores helps users assess whether results are trustworthy. It also enables targeted correction (fixing a wrong assumption or data source) instead of discarding entire outputs. This dramatically improves repeated use and trust.

- Control gradients (increase Value while managing Risk): Giving users the ability to adjust the level of autonomy lets them gradually adopt higher-value features. A beginner might use the AI only for suggestions, while an advanced user can enable semi-automation. This progressive exposure builds trust and maximises long-term Value.
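Three of these principles (strategic human-in-the-loop, reversibility, and control gradients) can be sketched together in a few lines. This is one possible shape under stated assumptions, not a reference implementation: the `Autonomy`, `Action`, and `Assistant` names are hypothetical, and "irreversible" is modelled simply as an action that has no `undo` callback.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Optional


class Autonomy(Enum):
    SUGGEST_ONLY = 1  # every action waits for the user
    SEMI_AUTO = 2     # only irreversible actions wait for the user


@dataclass
class Action:
    description: str
    apply: Callable[[], None]
    undo: Optional[Callable[[], None]] = None  # None marks an irreversible action


@dataclass
class Assistant:
    autonomy: Autonomy                 # control gradient chosen by the user
    approve: Callable[[Action], bool]  # human checkpoint, e.g. a confirm dialog
    history: list[Action] = field(default_factory=list)

    def run(self, action: Action) -> bool:
        """Apply the action if policy allows; return True when it was applied."""
        needs_human = self.autonomy is Autonomy.SUGGEST_ONLY or action.undo is None
        if needs_human and not self.approve(action):
            return False
        action.apply()
        if action.undo is not None:
            self.history.append(action)  # keep reversible steps for rollback
        return True

    def undo_last(self) -> bool:
        """Roll back the most recent reversible action, if any."""
        if not self.history:
            return False
        self.history.pop().undo()
        return True


# Usage: a user who declines every approval prompt.
doc = ["draft"]
sent = []
assistant = Assistant(Autonomy.SEMI_AUTO, approve=lambda a: False)

edit = Action("append a paragraph", apply=lambda: doc.append("ai text"), undo=lambda: doc.pop())
send = Action("send email", apply=lambda: sent.append("email"))  # no undo: irreversible

print(assistant.run(edit))  # True: reversible, applied without a prompt
print(assistant.run(send))  # False: irreversible, blocked because approval was declined
assistant.undo_last()       # rolls the edit back
print(doc, sent)            # ['draft'] []
```

The checkpoint fires only where a mistake could not be undone, so routine reversible edits keep their Value while the irreversible step stays under human control. Switching `autonomy` to `SUGGEST_ONLY` would route every action through `approve`, which suits a user who is still building trust.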

Guiding Questions for Product Teams

CAIR shifts AI product design away from purely technical benchmarks to adoption-centric thinking. To assess readiness, teams should ask:

- How easily can users detect and correct AI mistakes?

- How severe are the consequences of AI errors in this context?

- How much measurable value does a successfully completed AI task provide?

- How much oversight and control do humans retain at key decision points?

- How clearly are limitations and risks communicated to end users?

Takeaway

The companies that will succeed in AI are not necessarily those with the most advanced models, but those that engineer confidence through design.

- High CAIR → adoption, even with imperfect models.

- Low CAIR → stagnation, even with highly accurate models.

- Success lies in thoughtful product design: combining guardrails, oversight, transparency, and reversibility with AI capability.