ELB

Amazon Elastic Load Balancing (ELB) is an AWS service that automatically distributes incoming application traffic across multiple EC2 instances or IP addresses. It helps ensure high availability, fault tolerance, and scalability of your applications by routing traffic only to healthy instances. ELB offers several load balancer types, including Application Load Balancer (ALB), Network Load Balancer (NLB), and Classic Load Balancer (CLB), each tailored to specific use cases, from routing HTTP/HTTPS traffic to handling TCP/UDP connections. ELB monitors the health of instances and adjusts traffic distribution accordingly, which improves the performance and reliability of your applications.

ALB

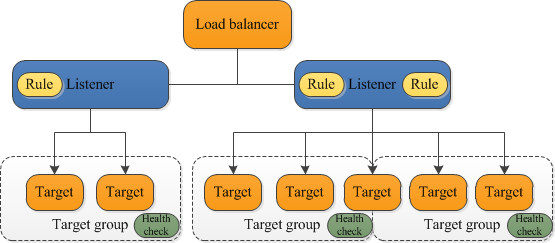

An Application Load Balancer distributes incoming application traffic across multiple targets, such as EC2 instances, in multiple Availability Zones. A listener checks for connection requests from clients, using the protocol and port that you configure. The rules that you define for a listener determine how the load balancer routes requests to its registered targets. Each rule consists of a priority, one or more actions, and one or more conditions.

source: https://docs.aws.amazon.com/elasticloadbalancing/latest/application/introduction.html

NLB

NLB is for load balancing of TCP/UDP traffic where extreme performance is required. Operating at the connection level (Layer 4), NLB routes traffic to targets within Amazon VPC and is capable of handling millions of requests per second while maintaining ultra-low latencies. NLB is also optimized to handle sudden and volatile traffic patterns.

A UDP load balancing configuration is often used for live broadcasts and online games, where speed is important and there is little need for error correction. UDP has low latency because it is connectionless: it does not perform handshakes, acknowledgements, or retransmissions, which makes it faster than TCP at the cost of reliability.

Connect NLB to Bastion host

Remember that bastion hosts use SSH, which is a TCP-based protocol on port 22, and that they must be publicly accessible.

The correct choice here is a Network Load Balancer, which supports TCP traffic and forwards the SSH connection to the EC2 instance in the backend.

An ALB only supports HTTP and HTTPS traffic, which is layer 7, while the SSH protocol runs directly over TCP, which is layer 4. So the Application Load Balancer doesn't work for this case.

Targeting options

There are 2 options in NLB for routing requests to targets:

- If you specify targets using an instance ID, traffic is routed to instances using the primary private IP address specified in the primary network interface for the instance. The load balancer rewrites the destination IP address of the data packet before forwarding it to the target instance.

- If you specify targets using IP addresses, you can route traffic to an instance using any private IP address from one or more network interfaces. This enables multiple applications on an instance to use the same port. Note that each network interface can have its own security group. The load balancer rewrites the destination IP address before forwarding it to the target.

A private IPv4 address is an IP address that's not reachable over the Internet. You can use private IPv4 addresses for communication between instances in the same VPC.

When you launch an EC2 instance into an IPv4-only or dual stack (IPv4 and IPv6) subnet, the instance receives a primary private IP address from the IPv4 address range of the subnet. For more information, see IP addressing in the Amazon VPC User Guide. If you don't specify a primary private IP address when you launch the instance, we select an available IP address in the subnet's IPv4 range for you. Each instance has a default network interface (eth0) that is assigned the primary private IPv4 address. You can also specify additional private IPv4 addresses, known as secondary private IPv4 addresses. Unlike primary private IP addresses, secondary private IP addresses can be reassigned from one instance to another. For more information, see Multiple IP addresses.

A private IPv4 address, regardless of whether it is a primary or secondary address, remains associated with the network interface when the instance is stopped and started, or hibernated and started, and is released when the instance is terminated.

TLS Termination (Offload TLS)

TLS termination is an approach that offloads the decryption/encryption of TLS traffic from the application servers to the load balancer.

TLS evolved from a previous encryption protocol called Secure Sockets Layer (SSL), which was developed by Netscape.

With Network Load Balancer, you can offload the decryption/encryption of TLS traffic from your application servers to the Network Load Balancer, which helps you optimize the performance of your backend application servers while keeping your workloads secure. Additionally, Network Load Balancers preserve the source IP of the clients to the back-end applications, while terminating TLS on the load balancer.

Steps to do SSL termination

- Configure an SSL/TLS certificate on an Application Load Balancer via AWS Certificate Manager (ACM)

- Create an HTTPS listener on the Application Load Balancer with SSL termination

- The Application Load Balancer supports TLS offloading.

- The Classic Load Balancer supports SSL offloading.

Further reading: How can I associate an ACM SSL/TLS certificate with a Classic, Application, or Network Load Balancer?

Behaviour

Deregistration

Deregistering an EC2 instance removes it from your load balancer. The load balancer stops routing requests to an instance as soon as it is deregistered. If demand decreases, or you need to service your instances, you can deregister instances from the load balancer. An instance that is deregistered remains running, but no longer receives traffic from the load balancer, and you can register it with the load balancer again when you are ready.

Elastic Load Balancing stops sending requests to targets that are deregistering. By default, Elastic Load Balancing waits 300 seconds before completing the deregistration process, which can help in-flight requests to the target to complete.

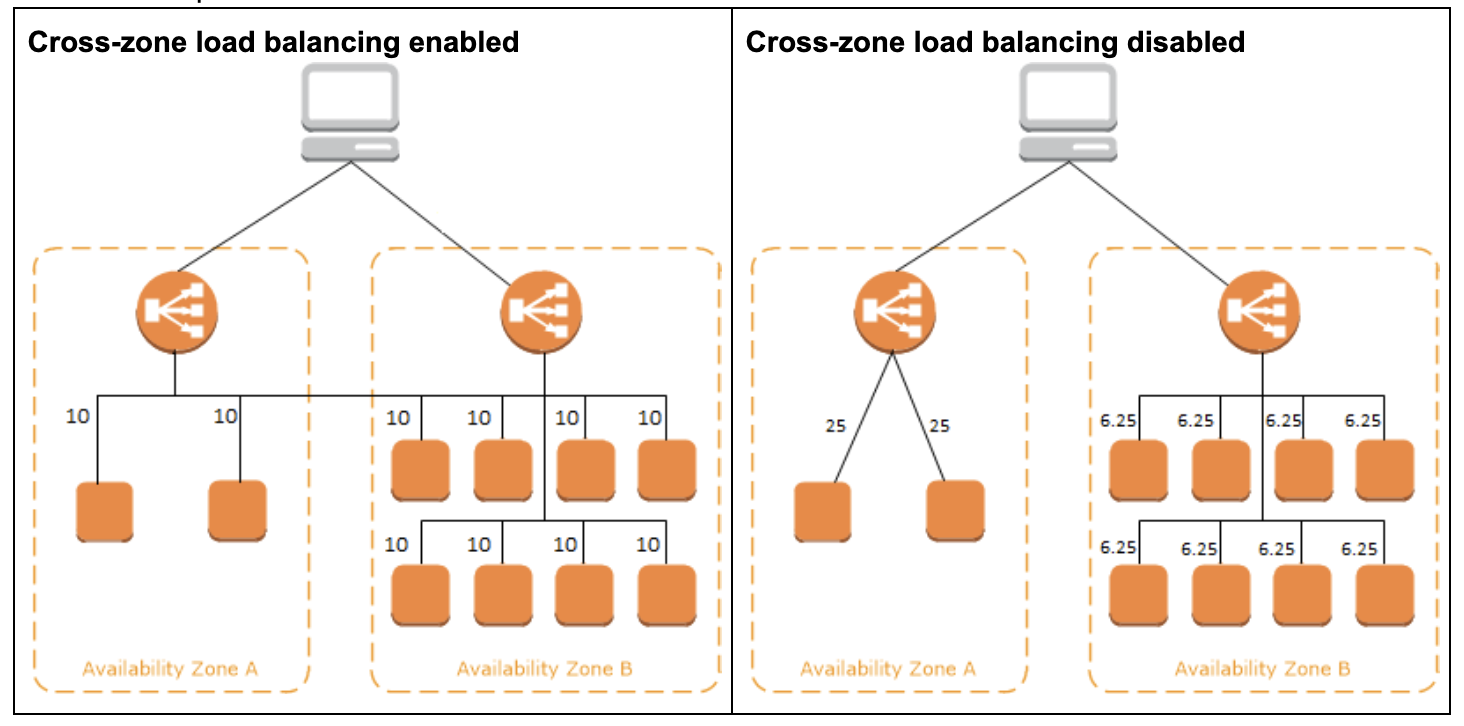

Cross-Zone Load Balancing

If you enable multiple Availability Zones for your load balancer, this increases the fault tolerance of your applications.

- By default, cross-zone load balancing is enabled (always on) for ALB and disabled for NLB

- You cannot disable Availability Zones for an NLB after you create it

Example 1:

- With cross-zone load balancing, one instance in Availability Zone A receives 20% traffic and four instances in Availability Zone B receive 20% traffic each.

- Without cross-zone load balancing, one instance in Availability Zone A receives 50% traffic and four instances in Availability Zone B receive 12.5% traffic each
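The percentages in Example 1 can be reproduced with a short calculation. This is a minimal sketch that assumes client traffic is split evenly between the load balancer nodes of each Availability Zone; the function name `traffic_share` and the zone labels are illustrative, not part of any AWS API.

```python
def traffic_share(instances_per_az, cross_zone):
    """Return the percentage of total traffic each instance receives, per AZ.

    Assumption: every enabled AZ receives an equal share of client traffic.
    """
    zones = {az: n for az, n in instances_per_az.items() if n > 0}
    if cross_zone:
        # Every node spreads requests over all instances in all zones.
        total = sum(zones.values())
        return {az: 100 / total for az in zones}
    # Each node only spreads its zone's share over the instances in that zone.
    per_zone = 100 / len(zones)
    return {az: per_zone / n for az, n in zones.items()}


fleet = {"A": 1, "B": 4}
print(traffic_share(fleet, cross_zone=True))   # {'A': 20.0, 'B': 20.0}
print(traffic_share(fleet, cross_zone=False))  # {'A': 50.0, 'B': 12.5}
```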

Example 2:

Ephemeral port range of ELB

The client that initiates the request chooses the ephemeral port range. The range varies depending on the client's operating system. Requests originating from Elastic Load Balancing use ports 1024-65535. So remember to add a rule to the Network ACLs to allow outbound traffic on ports 1024 - 65535 to send the result back to a client.

For a detailed explanation, visit Ephemeral port : What is it and what does it do?

Target group contains only unhealthy targets?

If a target group contains only unhealthy targets, ELB routes requests across its unhealthy targets. It assumes that the health check itself may be wrong and that the instances may still be able to serve some requests. This is a best-effort scenario.
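The behaviour described above can be pictured as a simple fallback. This is only an illustrative sketch, not how ELB is implemented; the function name `routable_targets` and the instance IDs are hypothetical.

```python
def routable_targets(health_by_target):
    """Return the targets that may receive traffic.

    Healthy targets are preferred; if none are healthy, fall back to all
    targets (best effort) instead of dropping every request.
    """
    healthy = [target for target, ok in health_by_target.items() if ok]
    return healthy if healthy else list(health_by_target)


print(routable_targets({"i-a": True, "i-b": False}))   # ['i-a']
print(routable_targets({"i-a": False, "i-b": False}))  # ['i-a', 'i-b']
```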

Configuration & Features

Connection Draining

To ensure that an Elastic Load Balancer stops sending requests to instances that are de-registering or unhealthy while keeping the existing connections open, use connection draining. This enables the load balancer to complete in-flight requests made to instances that are de-registering or unhealthy.

Idle Timeout

A connection is idle when no data is being sent or received over it.

For each request that a client makes through an Elastic Load Balancer, the load balancer maintains two connections.

- The front-end connection is between the client and the load balancer.

- The back-end connection is between the load balancer and a registered EC2 instance.

The load balancer has a configured "idle timeout" period that applies to its connections. If no data has been sent or received by the time that the "idle timeout" period elapses, the load balancer closes the connection.

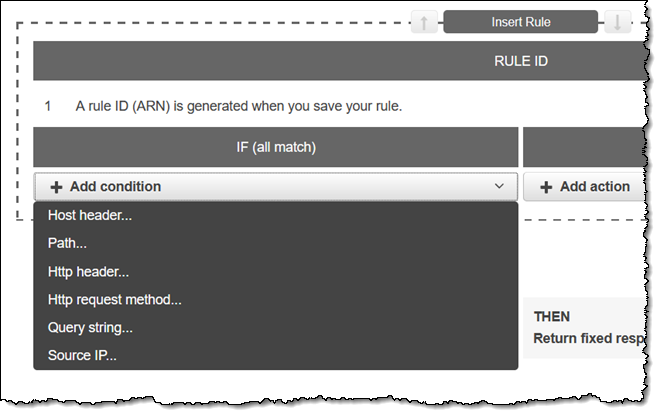

Listener rules

Listener rules, which are set up on an ALB, allow you to define how incoming traffic is routed based on various conditions. They are configured at the listener level and determine which target group should receive the incoming request based on criteria such as the request path, host header, HTTP headers, query parameters, or source IP address.

By defining specific rules, you can implement routing policies, redirect requests, or conditionally route traffic to different target groups. Listener rules provide flexibility in directing traffic to different application components based on specific conditions, enabling you to build dynamic and customized routing configurations.

Resource: New – Advanced Request Routing for AWS Application Load Balancers

Rule Condition types

Three commonly used condition types that you can configure are:

- Host-based routing: api.smart.com and mobile.smart.com

- Path-based routing: /api and /mobile

- Query string routing
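To make the rule model concrete, here is a minimal sketch of how a listener might pick a target group from host-based and path-based conditions, evaluating rules in priority order and falling back to a default. The rule layout, the target group names, and the use of `fnmatchcase` for wildcards are assumptions made for illustration; this is not the ALB implementation or API.

```python
from fnmatch import fnmatchcase

# Hypothetical rules: lower priority numbers are evaluated first.
RULES = [
    {"priority": 10, "host": "api.smart.com", "path": None, "target_group": "api-tg"},
    {"priority": 20, "host": None, "path": "/mobile*", "target_group": "mobile-tg"},
]
DEFAULT_TARGET_GROUP = "web-tg"  # hypothetical default action


def pick_target_group(host, path, rules=RULES, default=DEFAULT_TARGET_GROUP):
    """Return the target group of the first rule whose conditions all match."""
    for rule in sorted(rules, key=lambda r: r["priority"]):
        # A missing condition (None) matches everything.
        host_ok = rule["host"] is None or fnmatchcase(host.lower(), rule["host"])
        path_ok = rule["path"] is None or fnmatchcase(path, rule["path"])
        if host_ok and path_ok:
            return rule["target_group"]
    return default


print(pick_target_group("api.smart.com", "/orders"))       # api-tg
print(pick_target_group("www.smart.com", "/mobile/home"))  # mobile-tg
print(pick_target_group("www.smart.com", "/"))             # web-tg
```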

Reference: Official documentation

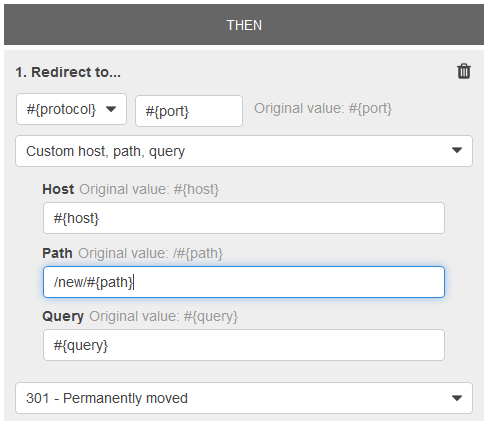

Redirect actions for an ALB

You can use query string conditions to configure rules that route requests based on key/value pairs or values in the query string.

The example condition below is satisfied by requests with a query string that includes either a key/value pair of "version=v1" or any key whose value contains "example".

    [
      {
        "Field": "query-string",
        "QueryStringConfig": {
          "Values": [
            {
              "Key": "version",
              "Value": "v1"
            },
            {
              "Value": "*example*"
            }
          ]
        }
      }
    ]
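The following sketch shows one way to read the condition above: a request matches if any entry in `Values` matches at least one key/value pair of the query string. It approximates the wildcard and case-insensitive matching with `fnmatchcase` on lowercased strings, which is an assumption for illustration rather than the exact ALB matching rules; the function name `matches_query_string` is hypothetical.

```python
from fnmatch import fnmatchcase
from urllib.parse import parse_qsl

# The "Values" list from the condition above.
CONDITION_VALUES = [{"Key": "version", "Value": "v1"}, {"Value": "*example*"}]


def matches_query_string(query, values=CONDITION_VALUES):
    """Return True if any condition entry matches a pair in the query string."""
    pairs = [(k.lower(), v.lower())
             for k, v in parse_qsl(query, keep_blank_values=True)]
    for wanted in values:
        # An entry without "Key" matches any key.
        key_pattern = wanted.get("Key", "*").lower()
        value_pattern = wanted["Value"].lower()
        if any(fnmatchcase(k, key_pattern) and fnmatchcase(v, value_pattern)
               for k, v in pairs):
            return True
    return False


print(matches_query_string("version=v1"))       # True
print(matches_query_string("id=my-example-1"))  # True
print(matches_query_string("version=v2"))       # False
```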

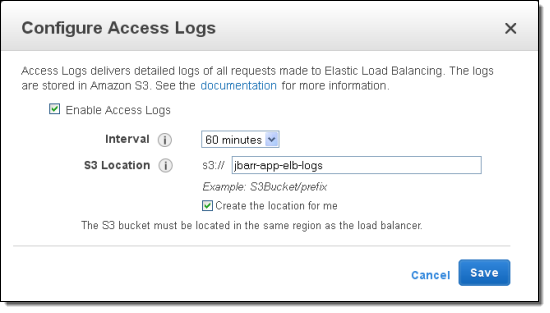

ELB access logs

Access logging is an optional feature of Elastic Load Balancing that is disabled by default. The access logs capture detailed information about requests sent to your load balancer. Each log contains information such as

- the time the request was received

- the client's IP address, latencies

- request paths

- server responses

You can use these access logs to analyze traffic patterns and troubleshoot issues. Each access log file is automatically encrypted using SSE-S3 before it is stored in your S3 bucket and decrypted when you access it. You do not need to take any action; the encryption and decryption is performed transparently.

You cannot use CloudTrail logs to analyze the network traffic passing through the ELB, because CloudTrail records API calls made to the service rather than client requests.

Target group settings

Slow start mode

Slow start is a feature in AWS load balancer target groups that controls the gradual ramp-up of traffic to newly registered or recovered targets. When targets are added to a target group or become healthy after being unhealthy, the slow start feature allows the load balancer to gradually increase the number of requests it sends to those targets over a specified duration.

This helps to prevent overwhelming the newly added or recovered targets with a sudden surge of traffic, allowing them to warm up and stabilize before handling full load. Slow start helps maintain the overall stability and performance of the application during these transitional periods.
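As a rough picture of the ramp-up, the sketch below computes the fraction of its normal share that a target would receive at a given moment. It assumes a linear ramp over the configured duration; the function name `slow_start_share` and its parameters are illustrative, not an AWS API.

```python
def slow_start_share(seconds_since_healthy, duration_seconds):
    """Fraction (0.0 to 1.0) of its full share a target receives during ramp-up.

    Assumption: traffic grows linearly from 0 to the full share over
    `duration_seconds`; a duration of 0 means slow start is disabled.
    """
    if duration_seconds <= 0 or seconds_since_healthy >= duration_seconds:
        return 1.0
    return max(seconds_since_healthy, 0) / duration_seconds


print(slow_start_share(0, 60))   # 0.0
print(slow_start_share(30, 60))  # 0.5
print(slow_start_share(90, 60))  # 1.0
```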

Request Routing Algorithms

There are 2 algorithms that work only for ALB/CLB:

- Least Outstanding Requests: The next instance to receive a request is the instance that has the lowest number of pending/unfinished requests.

- Round Robin: Targets are chosen from the target group in turn, so each receives an equal share of requests.

  - Servers are assigned in a repeating sequence, so that the next server assigned is guaranteed to be the least recently used.
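Both algorithms are easy to sketch. The code below is a simplified illustration with hypothetical instance IDs and in-memory counters, not the load balancer's actual implementation.

```python
from itertools import cycle


def round_robin(targets):
    """Return an iterator that yields targets in a repeating sequence."""
    return cycle(targets)


def least_outstanding(pending_by_target):
    """Return the target with the fewest pending (unfinished) requests."""
    return min(pending_by_target, key=pending_by_target.get)


rr = round_robin(["i-a", "i-b", "i-c"])
print([next(rr) for _ in range(4)])  # ['i-a', 'i-b', 'i-c', 'i-a']

print(least_outstanding({"i-a": 3, "i-b": 1, "i-c": 2}))  # i-b
```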

There is only one algorithm, and it works only for NLB:

- Flow Hash (consistent hashing): The algorithm selects a target based on the protocol, source/destination IP address, source/destination port, and TCP sequence number. Each TCP/UDP connection is routed to a single target for the life of the connection.

  - Similar to database sharding, the server can be assigned consistently based on IP address or URL.
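The idea that one flow always lands on the same target can be sketched by hashing the flow's identifying fields. Note the simplifications: this sketch uses plain modulo hashing with SHA-256 rather than a true consistent-hashing ring, and it leaves out the TCP sequence number; the function name and addresses are hypothetical.

```python
import hashlib


def flow_hash_target(protocol, src_ip, src_port, dst_ip, dst_port, targets):
    """Map a flow (protocol plus source and destination endpoints) to a target.

    The same tuple always yields the same target as long as `targets`
    does not change.
    """
    flow = f"{protocol}|{src_ip}|{src_port}|{dst_ip}|{dst_port}".encode()
    digest = hashlib.sha256(flow).digest()
    index = int.from_bytes(digest[:8], "big") % len(targets)
    return targets[index]


targets = ["i-a", "i-b", "i-c"]
first = flow_hash_target("tcp", "198.51.100.4", 50432, "10.0.1.10", 443, targets)
again = flow_hash_target("tcp", "198.51.100.4", 50432, "10.0.1.10", 443, targets)
print(first == again)  # True
```

With modulo hashing, adding or removing a target remaps many flows; a consistent-hashing ring limits that reshuffling, which is why it is the usual choice in practice.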

Troubleshooting

Request of true IP address

The X-Forwarded-For request header is automatically added and helps you identify the IP address of a client when you use an HTTP or HTTPS load balancer. Because load balancers intercept traffic between clients and servers, your server access logs contain only the IP address of the load balancer.

Elastic Load Balancing stores the IP address of the client in the X-Forwarded-For request header and passes the header to your server.
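On the server side, the client address can be read from that header. The sketch below takes the left-most entry, which is the conventional position of the original client when the header holds a comma-separated chain. The function name is hypothetical, and the left-most value can be forged by the client, so it should not be used for security decisions without further checks.

```python
def client_ip_from_xff(header_value):
    """Return the left-most address in an X-Forwarded-For header, or None.

    Caveat: clients can send their own X-Forwarded-For value, so the
    left-most entry is informational only.
    """
    if not header_value:
        return None
    first = header_value.split(",")[0].strip()
    return first or None


print(client_ip_from_xff("203.0.113.7, 10.0.0.5"))  # 203.0.113.7
print(client_ip_from_xff(None))                     # None
```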

Healthy status but receiving timeout

Imagine a health check is configured to ping the index.html page found in the root directory for the health status. When accessing the website via the internet, visitors receive timeout errors.

Check the security group rules of your EC2 instance. You need a security group rule that allows inbound traffic from your public IPv4 address on the proper port.

A security group acts as a virtual firewall for your EC2 instances to control incoming and outgoing traffic. Inbound rules control the incoming traffic to your instance, and outbound rules control the outgoing traffic from your instance.

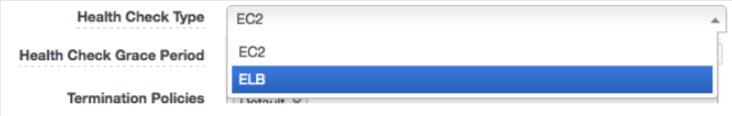

ASG doesn't replace an unhealthy instance

By default, the health check type of an Auto Scaling group is EC2, which only performs a status check of the EC2 instances. To automate the replacement of unhealthy EC2 instances, you must change the health check type of your instance's Auto Scaling group from EC2 to ELB by using a configuration file.

It is strongly recommended to set the health check type of your Auto Scaling group to ELB instead of EC2, so that the health status stays in sync between the ELB and the ASG.

Reference: EC2 vs. ELB Health Check on an Auto Scaling Group

By setting the Health Check Type to ELB, we can be sure that if the ELB health check is failing, the instance will be terminated and a new one will take its place, giving you true failover in the event that your application goes down. As a bonus, if the EC2 instance itself goes down, you will still get the proper failover from an ELB health check because your application will be unreachable to the ELB, deemed unhealthy, and subsequently destroyed by the Auto Scaling group. This gives you the best of both worlds.
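The difference between the two health check types can be summarised as a small decision function. This is a simplified sketch of the logic described above, with a hypothetical function name and boolean inputs; it is not an Auto Scaling API.

```python
def should_replace(health_check_type, ec2_status_ok, elb_health_ok):
    """Decide whether the Auto Scaling group replaces an instance.

    With type "EC2" only the EC2 status check counts; with type "ELB"
    a failing load balancer health check also triggers replacement.
    """
    if not ec2_status_ok:
        return True
    return health_check_type == "ELB" and not elb_health_ok


# The application is down, but the instance itself is still running.
print(should_replace("EC2", ec2_status_ok=True, elb_health_ok=False))  # False
print(should_replace("ELB", ec2_status_ok=True, elb_health_ok=False))  # True
```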

Q: An e-commerce company has a fleet of EC2 based web servers running into very high CPU utilization issues. The development team has determined that serving secure traffic via HTTPS is a major contributor to the high CPU load. Which of the following steps can take the high CPU load off the web servers? (Select two)

A:

- Configure an SSL/TLS certificate on an Application Load Balancer via AWS Certificate Manager (ACM)

- Create an HTTPS listener on the Application Load Balancer with SSL termination

To use an HTTPS listener, you must deploy at least one SSL/TLS server certificate on your load balancer. You can create an HTTPS listener, which uses encrypted connections (also known as SSL offload). This feature enables traffic encryption between your load balancer and the clients that initiate SSL or TLS sessions.

As the EC2 instances are under heavy CPU load, the load balancer will use the server certificate to terminate the front-end connection and then decrypt requests from clients before sending them to the EC2 instances.

Please review this resource to understand how to associate an ACM SSL/TLS certificate with an Application Load Balancer: https://aws.amazon.com/premiumsupport/knowledge-center/associate-acm-certificate-alb-nlb/

Reference: What is SSL Termination Load Balancer?

SSL termination at load balancer is desired because decryption is resource and CPU intensive. Putting the decryption burden on the load balancer enables the server to spend processing power on application tasks, which helps improve performance. It also simplifies the management of SSL certificates.

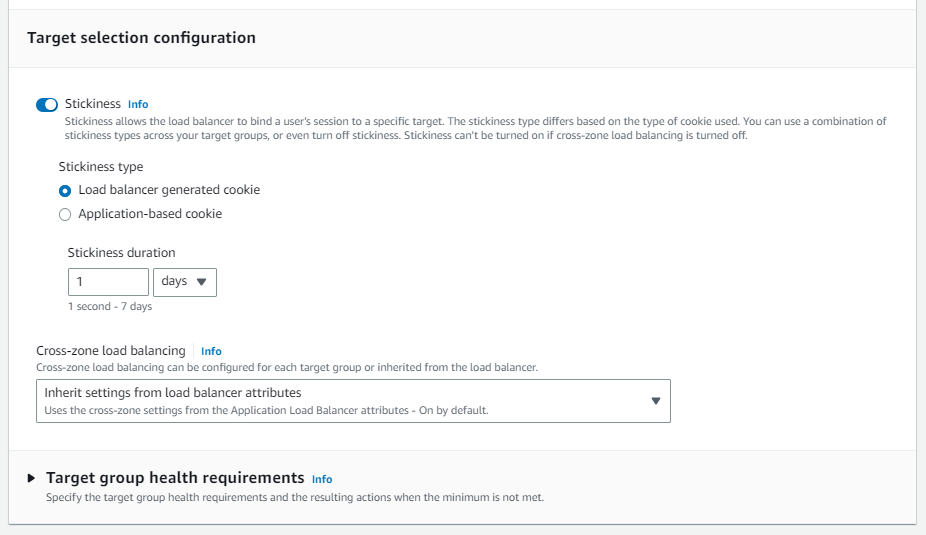

Unequal traffic routing

Here are the reasons why an ELB may route more traffic to one instance or Availability Zone than to the others.

- Clients are routing requests to an incorrect IP address of a load balancer node with a DNS record that has an expired TTL.

- Sticky sessions (session affinity) are enabled for the load balancer. Sticky sessions use cookies to help the client maintain a connection to the same instance over a cookie's lifetime, which can cause imbalances over time.

- Available healthy instances aren’t evenly distributed across Availability Zones.

- Instances of a specific capacity type aren’t equally distributed across Availability Zones.

- There are long-lived TCP connections between clients and instances.

- The connection uses a WebSocket.

How to solve unequal distribution traffic ELB?

If there is an unequal distribution of load due to stickiness, you can rebalance the load on your targets using the following two options:

- [Application side] Set an expiry on the cookie generated by the application that is prior to the current date and time. This will prevent clients from sending the cookie to the Application Load Balancer, which will restart the process of establishing stickiness.

- [Load balancer side] Set a very short duration on the load balancer’s application-based stickiness configuration, for example, 1 second. This forces the Application Load Balancer to reestablish stickiness even if the cookie set by the target has not expired.

Error codes

TL;DR - 4XX codes are client-side errors and 5XX codes are server (target) problems

- HTTP 500
  - HTTP 500 indicates an 'Internal server' error
  - Several reasons:
    - A client submitted a request without an HTTP protocol
    - The load balancer was unable to generate a redirect URL
    - There was an error executing the web ACL rules

- HTTP 503
  - HTTP 503 indicates a 'Service unavailable' error
  - This error in ALB is an indicator of the target groups for the load balancer having no registered targets.

- HTTP 504
  - HTTP 504 indicates a 'Gateway timeout' error
  - Several reasons:
    - The load balancer failed to establish a connection to the target before the connection timeout expired
    - The load balancer established a connection to the target but the target did not respond before the idle timeout period elapsed

- HTTP 400
  - HTTP 400 indicates 'The client sent a malformed request that does not meet HTTP specifications.'

- HTTP 403
  - HTTP 403 is a 'Forbidden' error.
  - You configured an AWS WAF web access control list (web ACL) to monitor requests to your ALB and it blocked a request.

Best practice

How many load balancers do I need?

As a best practice, you want at least two load balancers in a clustered pair. If you only have a single load balancer and it fails for any reason, then your whole system will fail. This is known as a Single Point of Failure (SPOF). With load balancers, the number you require depends on how much traffic you handle and how much uptime availability you want. Generally, the more load balancers you have, the better.

Don’t resolve the ALB’s public IP

The load balancer is highly available and its public IP may change. The DNS name is constant.

When your load balancer is created, it receives a public DNS name that clients can use to send requests. The DNS servers resolve the DNS name of your load balancer to the public IP addresses of the load balancer nodes for your load balancer. Never resolve the IP of a load balancer as it can change with time. You should always use the DNS name.

How to handle expected flash traffic

ELB can handle the vast majority of use cases for the customers without requiring "pre-warming" (configuring the load balancer to have the appropriate level of capacity based on expected traffic).

In certain scenarios, such as when flash traffic is expected, or in the case where a load test cannot be configured to gradually increase traffic, AWS recommends that you contact AWS to have your load balancer "pre-warmed". AWS will then configure the load balancer to have the appropriate level of capacity based on the traffic that you expect.

Layer 4 Load Balancing vs. Layer 7 Load Balancing

A Layer 4 load balancer works at the transport layer, using the TCP and UDP protocols to manage transaction traffic based on a simple load balancing algorithm and basic information such as server connections and response times.

A Layer 7 load balancer works at the application layer (the highest layer in the OSI model) and makes its routing decisions based on more detailed information such as the characteristics of the HTTP/HTTPS header, message content, URL type, and cookie data.

Layer 4 Load Balancing

In AWS, this is the NLB, which handles TCP, UDP, and TLS traffic.

Layer 4 load balancing, operating at the transport level, manages traffic based on network information such as application ports and protocols without visibility into the actual content of messages. This is an effective approach for simple packet-level load balancing. The fact that messages are neither inspected nor decrypted allows them to be forwarded quickly, efficiently, and securely.

On the other hand, because layer 4 load balancing is unable to make decisions based on content, it's not possible to route traffic based on media type, localization rules, or other criteria beyond simple algorithms such as round-robin routing.

Layer 7 Load Balancing

In AWS, this is the ALB, which handles HTTP and HTTPS traffic.

Layer 7 load balancing operates at the application level, using protocols such as HTTP and SMTP to make decisions based on the actual content of each message. Instead of merely forwarding traffic unread, a layer 7 load balancer terminates network traffic, performs decryption as needed, inspects messages, makes content-based routing decisions, initiates a new TCP connection to the appropriate upstream server, and writes the request to the server.