ECS & ECR

ECR

Amazon Elastic Container Registry (ECR) is a fully managed container registry service provided by AWS. It is designed to simplify the storage, management, and deployment of Docker container images for use with services like EKS, ECS, and more.

Required Permission

Amazon ECR users require permission to call ecr:GetAuthorizationToken before they can authenticate to a registry and push or pull any images from any Amazon ECR repository. Amazon ECR provides several managed policies to control user access at varying levels.
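As a rough illustration of the permission described above, the following Python sketch builds an IAM policy document that allows ecr:GetAuthorizationToken plus the read-only actions typically needed to pull images. The function name and the selection of pull actions are assumptions made for this example; the AWS managed policies are usually the simpler route.

```python
import json


def build_ecr_pull_policy() -> dict:
    """Return a minimal IAM policy document for logging in to and pulling from ECR.

    Illustrative sketch: the function name is hypothetical, and a real policy
    would normally scope the second statement to specific repository ARNs.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # GetAuthorizationToken is not tied to a single repository,
                # so it is granted on all resources.
                "Effect": "Allow",
                "Action": "ecr:GetAuthorizationToken",
                "Resource": "*",
            },
            {
                # Read-only actions used when pulling image layers and manifests.
                "Effect": "Allow",
                "Action": [
                    "ecr:BatchCheckLayerAvailability",
                    "ecr:GetDownloadUrlForLayer",
                    "ecr:BatchGetImage",
                ],
                "Resource": "*",
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_ecr_pull_policy(), indent=2))
```

The printed JSON could then be attached to a user or role as an inline policy.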

Pull docker images from ECR

Pulling an image takes two commands. The get-login command (AWS CLI version 1) retrieves a token that is valid for a specified registry for 12 hours, and then it prints a docker login command with that authorization token. You can execute the printed command to log in to your registry with Docker, or run it automatically using the $() command wrapper. AWS CLI version 2 removed get-login; there, pipe the output of aws ecr get-login-password into docker login instead.

After you have logged in to an Amazon ECR registry with this command, you can use the Docker CLI to push and pull images from that registry until the token expires. The docker pull command is used to pull an image from the ECR registry.

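# Step 1: log in to the registry (AWS CLI v1 syntax)
$(aws ecr get-login --no-include-email)
# With AWS CLI v2, use this instead:
# aws ecr get-login-password --region eu-west-1 | docker login --username AWS --password-stdin 1234567890.dkr.ecr.eu-west-1.amazonaws.com

# Step 2: pull the image
docker pull 1234567890.dkr.ecr.eu-west-1.amazonaws.com/demo:latest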
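The image reference used in the pull command follows the pattern account.dkr.ecr.region.amazonaws.com/repository:tag. The Python sketch below splits such a reference into its parts, which can be handy when scripting logins for the right region. The EcrImage type and parse_ecr_image_uri function are hypothetical helpers, and the sketch assumes the standard amazonaws.com domain and tag-based (not digest-based) references.

```python
from typing import NamedTuple


class EcrImage(NamedTuple):
    account_id: str
    region: str
    repository: str
    tag: str


def parse_ecr_image_uri(uri: str) -> EcrImage:
    """Split '<account>.dkr.ecr.<region>.amazonaws.com/<repo>:<tag>' into parts."""
    host, _, path = uri.partition("/")
    labels = host.split(".")
    # Expected host labels: account, "dkr", "ecr", region, "amazonaws", "com"
    if len(labels) != 6 or labels[1:3] != ["dkr", "ecr"] or labels[4:] != ["amazonaws", "com"]:
        raise ValueError(f"not an ECR registry host: {host!r}")
    if not path:
        raise ValueError("missing repository name")
    repository, sep, tag = path.rpartition(":")
    if not sep:
        # No tag given; Docker falls back to "latest" in that case.
        repository, tag = path, "latest"
    return EcrImage(labels[0], labels[3], repository, tag)


if __name__ == "__main__":
    print(parse_ecr_image_uri("1234567890.dkr.ecr.eu-west-1.amazonaws.com/demo:latest"))
```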

The ECS Stack

The following stack shows how we deploy an app container into ECS.

Containers

To deploy applications on Amazon ECS, your application components must be architected to run in containers. This can be done using a Dockerfile, which defines a container image. These images can be hosted on a registry such as ECR (described above). ECS pulls the images from that registry and runs them as containers.

Service

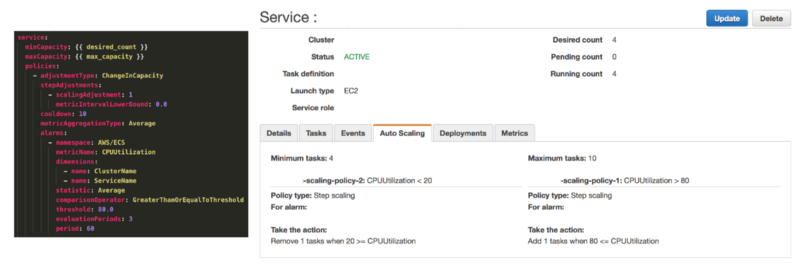

Source: Load Balanced and Auto Scaling containerized app with AWS ECS

A service defines how many tasks of a given task definition should run at any one time, within a minimum and a maximum. It also covers auto scaling and load balancing. In effect, the service sets how much capacity the application runs with: for example, if CPU utilization crosses a certain threshold, the service can start more tasks or stop some.
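To make the scaling idea concrete, here is a small Python sketch of a proportional scaling rule clamped to a minimum and maximum task count. It is a simplified model for illustration only, not the algorithm that ECS Service Auto Scaling actually runs; the function name and parameters are hypothetical.

```python
import math


def desired_task_count(current_tasks: int, cpu_utilization: float,
                       target_utilization: float, min_tasks: int, max_tasks: int) -> int:
    """Scale the task count in proportion to how far CPU is from the target.

    Simplified proportional rule for illustration only.
    """
    if target_utilization <= 0:
        raise ValueError("target_utilization must be positive")
    proposed = math.ceil(current_tasks * cpu_utilization / target_utilization)
    # Clamp to the service's configured bounds.
    return max(min_tasks, min(max_tasks, proposed))


if __name__ == "__main__":
    # Two tasks at 90% CPU with a 60% target, bounded to 1..10 tasks.
    print(desired_task_count(2, 90.0, 60.0, 1, 10))
```

With two tasks running at 90% CPU against a 60% target, the rule asks for three tasks; the bounds keep the result from dropping below the minimum or exceeding the maximum.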

For reference, here is a task definition that such a service could run:

{
    "family": "webserver",
    "containerDefinitions": [
        {
            "name": "web",
            "image": "nginx",
            "memory": "100",
            "cpu": "99"
        }
    ],
    "requiresCompatibilities": [
        "FARGATE"
    ],
    "networkMode": "awsvpc",
    "memory": "512",
    "cpu": "256"
}

Task

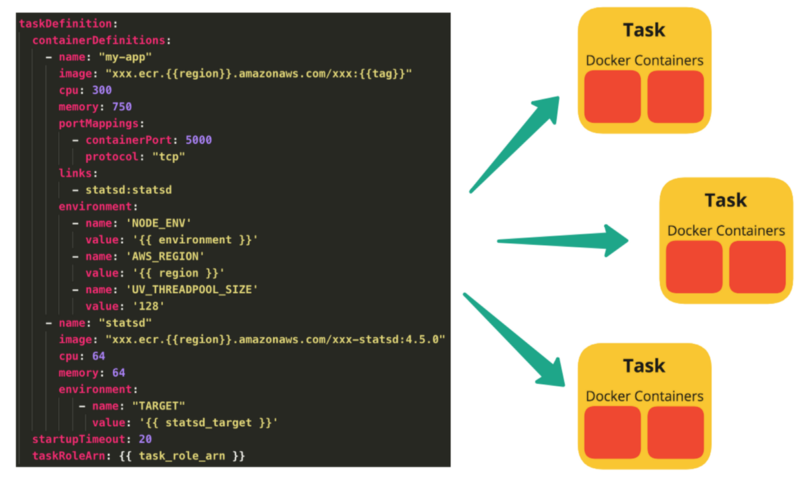

Source: Load Balanced and Auto Scaling containerized app with AWS ECS

To prepare your application to run on Amazon ECS, you create a task definition. The task definition is a text file, in JSON format, that describes one or more containers, up to a maximum of ten, that form your application. It can be thought of as a blueprint for your application.
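Since a task definition is just JSON, some basic sanity checks can be scripted before registering it. The Python sketch below checks for a family name, at least one container, the ten-container limit stated above, and a name and image per container. The function name and the exact set of checks are assumptions for illustration; ECS performs its own, far more complete validation.

```python
MAX_CONTAINERS_PER_TASK = 10  # limit stated in the text above


def validate_task_definition(task_def: dict) -> list:
    """Return a list of human-readable problems; an empty list means none found."""
    problems = []
    if not task_def.get("family"):
        problems.append("missing 'family'")
    containers = task_def.get("containerDefinitions", [])
    if not containers:
        problems.append("at least one container definition is required")
    if len(containers) > MAX_CONTAINERS_PER_TASK:
        problems.append(
            f"{len(containers)} containers exceeds the limit of {MAX_CONTAINERS_PER_TASK}"
        )
    for index, container in enumerate(containers):
        for key in ("name", "image"):
            if not container.get(key):
                problems.append(f"container #{index} is missing '{key}'")
    return problems


if __name__ == "__main__":
    example = {
        "family": "webserver",
        "containerDefinitions": [{"name": "web", "image": "nginx"}],
    }
    print(validate_task_definition(example))
```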

Setting a service's desired task count to more than 1 is important for high availability, load balancing, scalability, and fault tolerance. It matters most for critical applications that need continuous uptime, such as web servers, API services, and online platforms. Multiple copies of a task run concurrently, so load is distributed across them and more traffic can be handled; if one copy fails, the others keep serving. The same setup helps with batch processing and parallel work to reduce processing time, and it allows rolling updates without downtime.

Also, the principles still apply when using the Fargate launch type in ECS. Even though Fargate abstracts away the underlying EC2 instances, the benefits of having multiple task instances remain the same.

Cluster

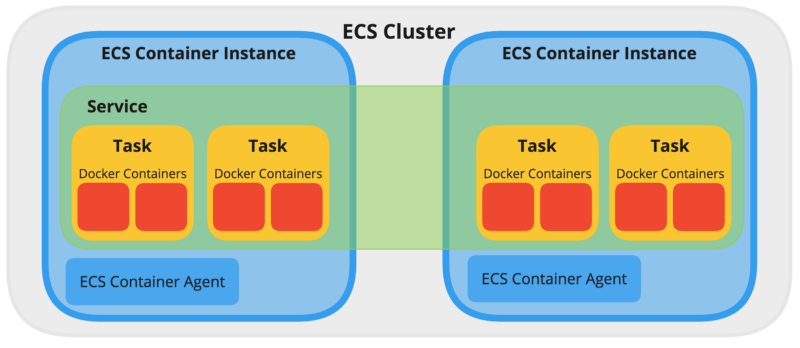

When you run tasks using Amazon ECS, you place them on a cluster, which is a logical grouping of resources. When using the Fargate launch type with tasks within your cluster, Amazon ECS manages your cluster resources. When using the EC2 launch type, your clusters are groups of container instances that you manage. An Amazon ECS container instance is an Amazon EC2 instance that is running the Amazon ECS container agent. Amazon ECS downloads your container images from a registry that you specify, and runs those images within your cluster.

The diagram shows an example ECS cluster, with one Service running four Tasks across two ECS Container Instances/Nodes.

Source: Load Balanced and Auto Scaling containerized app with AWS ECS

Container Agent

The container agent is what connects each container instance to the cluster. It runs on each infrastructure resource within an Amazon ECS cluster, sends information about the resource's current running tasks and resource utilization to Amazon ECS, and starts and stops tasks whenever it receives a request from Amazon ECS. For more information, see Amazon ECS Container Agent.

EC2 Launch Type

A terminated container instance persists in the ECS cluster

Q: A developer terminated a container instance in Amazon ECS, but the container instance continues to appear as a resource in the ECS cluster. How can this be fixed?

TL;DR - If you terminate an EC2 instance while it is in the STOPPED state, ECS does not pick up the change because the state is not synced, so you have to deregister the container instance yourself.

Ans: The container instance was terminated while it was in the STOPPED state, which led to this synchronization issue. If you terminate a container instance while it is in the STOPPED state, that container instance isn't automatically removed from the cluster. You need to deregister it by using the Amazon ECS console or the AWS Command Line Interface. Once deregistered, the container instance no longer appears as a resource in your Amazon ECS cluster.
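The deregistration can also be scripted. The sketch below is one possible approach in Python: it takes an ECS client object (assumed to behave like boto3.client("ecs")), looks for container instances whose agent is no longer connected, and force-deregisters them. Treating a disconnected agent as a sign of a stale instance is an assumption of this sketch, and the function name is hypothetical; verify that the instance is really gone before running anything like this against a live cluster.

```python
def deregister_disconnected_instances(ecs_client, cluster: str) -> list:
    """Force-deregister container instances whose agent is no longer connected.

    `ecs_client` is expected to behave like boto3.client("ecs").
    Pagination of list_container_instances is omitted for brevity.
    """
    arns = ecs_client.list_container_instances(cluster=cluster)["containerInstanceArns"]
    if not arns:
        return []
    described = ecs_client.describe_container_instances(
        cluster=cluster, containerInstances=arns
    )
    removed = []
    for instance in described["containerInstances"]:
        # Assumption: a disconnected agent marks the instance as stale.
        if not instance.get("agentConnected", True):
            ecs_client.deregister_container_instance(
                cluster=cluster,
                containerInstance=instance["containerInstanceArn"],
                force=True,  # deregister even if ECS still lists tasks on it
            )
            removed.append(instance["containerInstanceArn"])
    return removed
```

Assuming boto3 is installed and credentials are configured, this might be called as deregister_disconnected_instances(boto3.client("ecs"), "MyCluster"). Passing the client in as an argument keeps the function easy to try out with a stand-in object first.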

Container agent configuration

If your container instance was launched with a Linux variant of the Amazon ECS-optimized AMI, you can set the agent's environment variables in the /etc/ecs/ecs.config file and then restart the agent. You can also write these configuration variables to your container instances with Amazon EC2 user data at launch time. For more information, see Bootstrapping container instances with Amazon EC2 user data.

A few commonly used variables are described below. For the full list, see Amazon ECS container agent configuration.

ECS_ENABLE_TASK_IAM_ROLE: Enables IAM roles for tasks for containers with the bridge and default network modes. In plain English, this allows ECS tasks to use IAM roles.

ECS_ENGINE_AUTH_DATA: Registry authentication data, as found in a Docker configuration file. It is used together with ECS_ENGINE_AUTH_TYPE to pull images from private registries.

ECS_AVAILABLE_LOGGING_DRIVERS: The logging drivers available on the container instance. The Amazon ECS container agent running on a container instance must register the logging drivers available on that instance with this variable.

ECS_CLUSTER: The ECS cluster that the agent should check into. This is passed to the container instance at launch through Amazon EC2 user data.

Bootstrapping container instances

The Linux variants of the Amazon ECS-optimized AMI look for agent configuration data in the /etc/ecs/ecs.config file when the container agent starts. You can specify this configuration data at launch with Amazon EC2 user data. For more information about available Amazon ECS container agent configuration variables, see Amazon ECS container agent configuration.

To set only a single agent configuration variable, such as the cluster name, use echo to copy the variable to the configuration file:

#!/bin/bash
# Append the cluster name to the agent configuration file
echo "ECS_CLUSTER=MyCluster" >> /etc/ecs/ecs.config

If you have multiple variables to write to /etc/ecs/ecs.config, use the following heredoc format. This format writes everything between the lines beginning with cat and EOF to the configuration file.

#!/bin/bash
cat <<'EOF' >> /etc/ecs/ecs.config
ECS_CLUSTER=MyCluster
ECS_ENGINE_AUTH_TYPE=docker
ECS_ENGINE_AUTH_DATA={"https://index.docker.io/v1/":{"username":"my_name","password":"my_password","email":"email@example.com"}}
ECS_LOGLEVEL=debug
EOF
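If the user data is generated by tooling rather than written by hand, the heredoc format above can be rendered from a dictionary of settings. The following Python sketch shows one way to do that; render_ecs_user_data is a hypothetical helper, and it deliberately rejects keys or values containing newlines, since those would break the one-setting-per-line format.

```python
def render_ecs_user_data(settings: dict) -> str:
    """Render a bash user-data script that appends settings to /etc/ecs/ecs.config."""
    lines = ["#!/bin/bash", "cat <<'EOF' >> /etc/ecs/ecs.config"]
    for key, value in settings.items():
        # Each setting must fit on a single KEY=VALUE line.
        if "\n" in str(key) or "\n" in str(value):
            raise ValueError(f"newline not allowed in setting {key!r}")
        lines.append(f"{key}={value}")
    lines.append("EOF")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_ecs_user_data({"ECS_CLUSTER": "MyCluster", "ECS_LOGLEVEL": "debug"}))
```

The quoted 'EOF' delimiter in the generated script keeps the shell from expanding anything inside the heredoc, so values are written to the file exactly as given.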

REPLICA vs DAEMON service type

If you have an ECS cluster with three EC2 instances and you want to launch a new service with four tasks, the following will happen:

- Replica

Definition: The replica scheduling strategy places and maintains the desired number of tasks across your cluster. By default, the service scheduler spreads tasks across Availability Zones. You can use task placement strategies and constraints to customize task placement decisions.

Your four tasks are distributed over your three container instances. Unless you set placement strategies or constraints, do not rely on a particular layout: it can be two tasks on one instance and one on each of the others, or any other distribution, including all four on one instance. This is the use case for normal microservices.

- Daemon

Definition: The daemon scheduling strategy deploys exactly one task on each active container instance that meets all of the task placement constraints that you specify in your cluster. When using this strategy, there is no need to specify a desired number of tasks, a task placement strategy, or use Service Auto Scaling policies.

For a daemon you do not specify how many tasks you want to run. A daemon service automatically scales with the number of EC2 instances you have, so in this case it runs three tasks, not four. A daemon task is a pattern used when building microservices where a task is deployed onto each instance in a cluster to provide common supporting functionality like logging, monitoring, or backups for the tasks running your application code.
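The difference between the two strategies can be modelled in a few lines of Python. In this sketch the daemon strategy puts one task on every instance, while the replica strategy is approximated by an even round-robin spread. The round-robin is only a stand-in chosen for the example: the real scheduler's placement depends on the placement strategy and constraints in effect. Function names and instance IDs are hypothetical.

```python
def place_daemon(instances: list) -> dict:
    """Daemon strategy: exactly one task on every active container instance."""
    return {instance: 1 for instance in instances}


def place_replica_spread(instances: list, desired_count: int) -> dict:
    """Replica strategy, modelled here as an even round-robin spread."""
    if not instances:
        raise ValueError("no container instances available")
    placement = {instance: 0 for instance in instances}
    for i in range(desired_count):
        placement[instances[i % len(instances)]] += 1
    return placement


if __name__ == "__main__":
    cluster = ["i-a", "i-b", "i-c"]  # three container instances, as in the text
    print("replica:", place_replica_spread(cluster, 4))
    print("daemon: ", place_daemon(cluster))
```

For the three-instance cluster from the text, the replica model places four tasks in total, while the daemon model always yields three, one per instance.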

Further reading:

- Stack Overflow - What is difference between REPLICA and DAEMON service type in Amazon EC2 Container Service?

- AWS documentation

Fargate launch type

Use the Fargate launch type when you want to run containers without managing the underlying infrastructure, allowing you to focus solely on your application. By contrast, with the EC2 launch type you manage the provisioning and scaling of EC2 instances yourself, including selecting the appropriate instance types and sizes. You are responsible for capacity management, ensuring you have enough instances to handle your workload, and configuring Auto Scaling groups. You must also handle instance maintenance such as patching, monitoring, and recovering from instance failures.

When to Use Fargate/EC2 launch type

The Fargate launch type is ideal when you want to avoid managing the underlying infrastructure. It abstracts away the complexities of server management, making it easier to deploy and scale applications. This is especially beneficial for dynamic and variable workloads, microservices architectures, and when operational simplicity is crucial.

The EC2 launch type is suitable when you need more control over the underlying instances, such as specifying instance types, managing network configurations, and optimizing for cost by using Spot instances. This option is typically preferred for steady-state, predictable workloads that can benefit from the cost efficiency of fully utilizing EC2 instances.

Considerations:

- Operational Overhead: Fargate reduces operational overhead since AWS manages the infrastructure, including patching and maintenance.

- Cost Efficiency: For highly utilized, steady-state applications, EC2 can be more cost-effective. However, Fargate offers cost efficiency for dynamic workloads where over-provisioning of EC2 instances would be costly.

- Scalability and Flexibility: Fargate provides seamless scaling and isolation for tasks, making it ideal for microservices and batch processing tasks.

There are two scenarios when you increase the desired task count:

- Scenario: EC2 Launch Type

  - Desired Tasks: When you increase the desired tasks to 2, ECS tries to run 2 instances of the task.

  - EC2 Instances: If your existing EC2 instances have enough resources (CPU, memory) to accommodate the additional task, ECS will place the new task on the existing instances. Hence, you might not see an increase in the number of EC2 instances.

  - Scaling: If the existing instances do not have enough resources, you would need to scale the Auto Scaling Group (ASG) manually or through auto-scaling policies.

- Scenario: Fargate Launch Type

  - Desired Tasks: When you increase the desired tasks to 2, Fargate handles provisioning the necessary compute resources.

  - Infrastructure: You won't see any EC2 instances because Fargate abstracts the infrastructure layer. AWS manages the underlying infrastructure for you.
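For the EC2 scenario, whether a new task fits on existing instances comes down to comparing the task's CPU and memory requirements with what each instance has free. The Python sketch below illustrates that check with a simple first-fit search. First-fit is an assumption made for the example rather than the placement algorithm ECS uses, and the function name and capacity figures are hypothetical.

```python
from typing import Optional


def find_instance_for_task(free_capacity: dict, task_cpu: int, task_memory: int) -> Optional[str]:
    """Return the first instance with enough free CPU units and memory (MiB), else None.

    `free_capacity` maps instance id -> (free_cpu_units, free_memory_mib).
    """
    for instance_id, (free_cpu, free_memory) in free_capacity.items():
        if free_cpu >= task_cpu and free_memory >= task_memory:
            return instance_id
    return None


if __name__ == "__main__":
    capacity = {"i-a": (128, 256), "i-b": (512, 1024)}
    # A task needing 256 CPU units and 512 MiB fits on i-b only.
    print(find_instance_for_task(capacity, 256, 512))
    # Nothing has 1024 free CPU units, so the cluster would need to scale out.
    print(find_instance_for_task(capacity, 1024, 512))
```

A result of None corresponds to the "Scaling" case above: no existing instance can host the task until the Auto Scaling group adds capacity.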

When to put multiple containers into a single task definition

When architecting your application to run on Amazon ECS using AWS Fargate, you must decide between deploying multiple containers into the same task definition and deploying containers separately in multiple task definitions.

If the following conditions are required, we recommend deploying multiple containers into the same task definition:

- Your containers share a common lifecycle (that is, they're launched and terminated together).

- Your containers must run on the same underlying host (that is, one container references the other on a localhost port).

- You require that your containers share CPU and memory resources.

- Your containers share data volumes.

If these conditions aren't required, we recommend deploying containers separately in multiple task definitions. This is because, by doing so, you can scale, provision, and deprovision them separately.

Source: Using the Fargate launch type
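As an illustration of containers that belong in one task definition, the Python sketch below builds a task definition with an application container and a log-shipping sidecar that share a data volume. It is not taken from the source above: the family name, the example/log-shipper image, the mount path, and the CPU and memory sizes are all hypothetical placeholders.

```python
import json


def build_sidecar_task_definition() -> dict:
    """Task definition with an app container and a sidecar sharing one volume.

    All names, images and sizes are hypothetical placeholders.
    """
    shared_mount = {"sourceVolume": "app-logs", "containerPath": "/var/log/app"}
    return {
        "family": "web-with-log-sidecar",
        "requiresCompatibilities": ["FARGATE"],
        "networkMode": "awsvpc",
        "cpu": "256",
        "memory": "512",
        # Task-level volume that both containers mount.
        "volumes": [{"name": "app-logs"}],
        "containerDefinitions": [
            {
                "name": "web",
                "image": "nginx",
                "essential": True,
                "mountPoints": [shared_mount],
            },
            {
                "name": "log-shipper",
                "image": "example/log-shipper",
                "essential": False,
                # The sidecar only reads what the web container writes.
                "mountPoints": [dict(shared_mount, readOnly=True)],
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_sidecar_task_definition(), indent=2))
```

Both containers start and stop together, run on the same host, and see the same volume, which matches the conditions listed above. A component that needs to scale independently would instead get its own task definition.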